Microsoft and NVIDIA have introduced the DeepSpeed- and Megatron-powered Megatron-Turing Natural Language Generation model (MT-NLG), the largest and most powerful monolithic transformer language model trained to date, with 530 billion parameters.

MT-NLG has three times as many parameters as the largest existing model of its kind, GPT-3 (175 billion parameters). It has achieved unrivaled accuracy on a broad set of standard language tasks such as completion prediction, reading comprehension, commonsense reasoning, natural language inference, and word sense disambiguation. The 105-layer, transformer-based MT-NLG outperformed the prior state-of-the-art models in zero-, one-, and few-shot settings and set a new standard for large-scale language models in both model scale and quality.

Training such a powerful model was made possible by numerous innovations. For example, NVIDIA and Microsoft combined a state-of-the-art GPU-based training infrastructure with a cutting-edge distributed learning software stack. They built natural language datasets with hundreds of billions of tokens and developed training methods to improve the efficiency and stability of optimization.

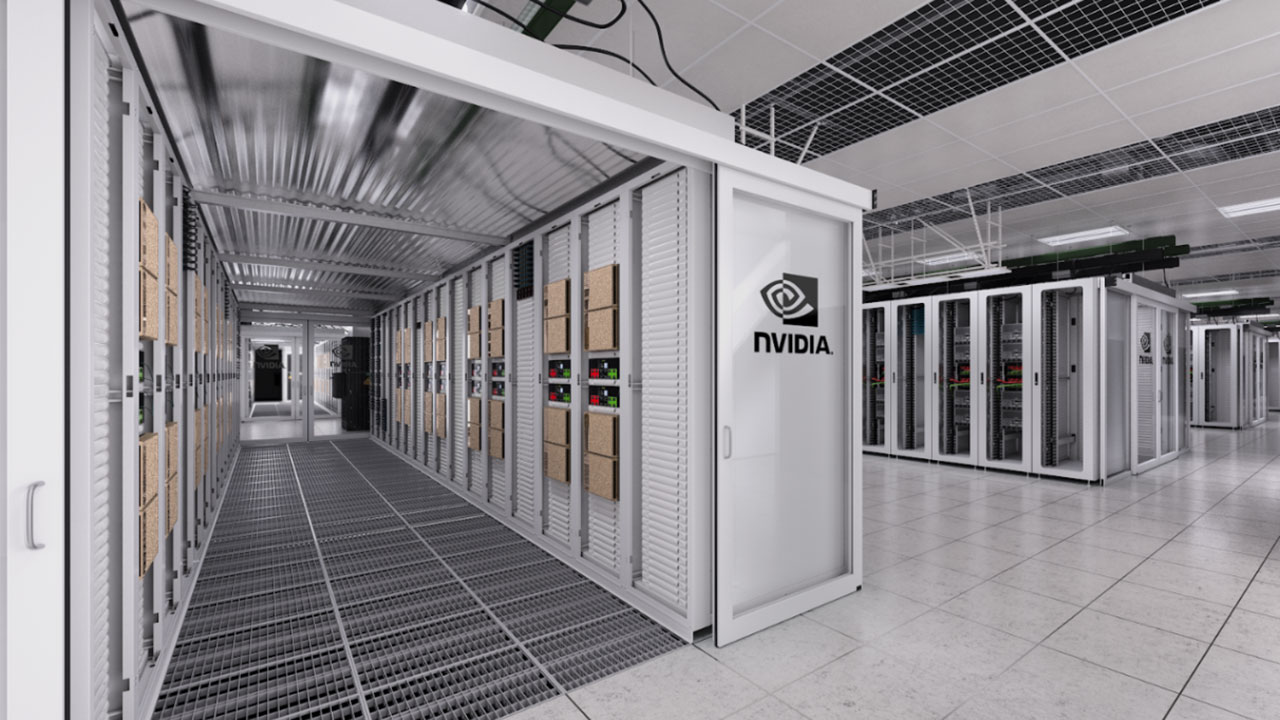

MT-NLG was trained on Microsoft’s Azure NDv4 and NVIDIA’s Selene supercomputer, which is powered by 560 DGX A100 servers, each equipped with eight NVIDIA A100 80GB Tensor Core GPUs. Each of these 4,480 graphics cards, which are extremely capable of processing the large amounts of data involved in training AI, currently costs a considerable amount commercially. Although the research team did not use all of the supercomputer’s power, training the AI still took more than a month.

While giant language models are advancing the state of the art in language generation, they also suffer from issues such as bias and toxicity. Microsoft researchers observed that the MT-NLG model picks up stereotypes and biases from the data on which it is trained, which means the model can produce offensive outputs that are potentially racist or sexist. Microsoft and NVIDIA are committed to addressing this problem. In addition, any use of MT-NLG in production scenarios must ensure that proper measures are put in place to mitigate and minimize potential harm to users.

“The quality and results that we have obtained today are a big step forward in the journey towards unlocking the full promise of AI in natural language. The innovations of DeepSpeed and Megatron-LM will benefit existing and future AI model development and make large AI models cheaper and faster to train. We look forward to how MT-NLG will shape tomorrow’s products and motivate the community to push the boundaries of NLP even further,” the company said in a press release.

Microsoft, NVIDIA introduce world’s largest and most powerful generative language model

Source: Tambay News
