Researchers at Cornell University’s Smart Computer Interfaces for Future Interactions (SciFi) Lab have developed sonar glasses that read their user’s silently spoken words.

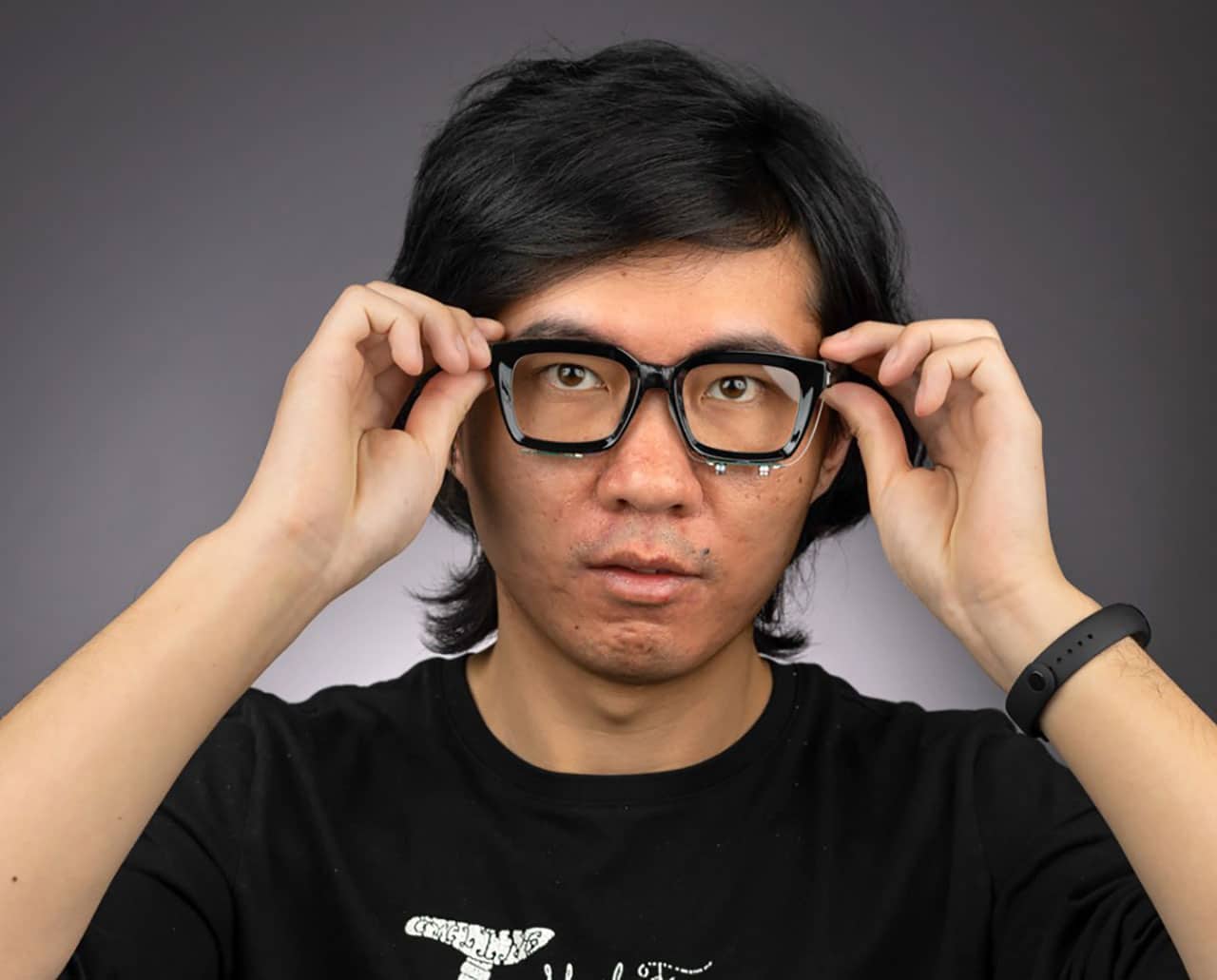

Called EchoSpeech, the seemingly ordinary, off-the-shelf eyeglasses are a silent-speech recognition interface that uses acoustic sensing and artificial intelligence to continuously recognize up to 31 unvocalized commands based on lip and mouth movements.

The low-power, wearable interface requires just a few minutes of user training data before it recognizes commands and can be run on a smartphone, researchers said.

“For people who cannot vocalize sound, this silent speech technology could be an excellent input for a voice synthesizer. It could give patients their voices back,” Ruidong Zhang, the lead researcher, said of the technology’s potential use with further development.

The EchoSpeech glasses are outfitted with a pair of microphones and speakers smaller than pencil erasers. These let the wearable, AI-powered sonar system send sound waves across the face, receive their echoes, and sense mouth movements. A deep-learning algorithm then analyzes the resulting echo profiles in real time with about 95% accuracy.
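To make the idea of an echo profile concrete, here is a minimal sketch in Python. It assumes a common sonar approach: emit a near-ultrasonic chirp, then cross-correlate the microphone signal with that chirp so that peaks mark echo delays. The article does not describe the researchers' actual signal processing, so the function names (`make_chirp`, `echo_profile`), the 18–21 kHz band, the 48 kHz sample rate, and the simulated 40-sample echo are all illustrative assumptions.

```python
import numpy as np

def make_chirp(f0, f1, duration, fs):
    """Linear frequency sweep from f0 to f1 Hz (hypothetical transmit signal)."""
    t = np.arange(int(duration * fs)) / fs
    k = (f1 - f0) / duration  # sweep rate in Hz per second
    return np.sin(2 * np.pi * (f0 * t + 0.5 * k * t**2))

def echo_profile(received, chirp):
    """Cross-correlate the microphone signal with the transmitted chirp.

    Peaks in the result mark the delays (in samples) at which echoes arrive.
    """
    return np.abs(np.correlate(received, chirp, mode="valid"))

fs = 48_000                                    # assumed sample rate in Hz
chirp = make_chirp(18_000, 21_000, 0.01, fs)   # assumed 10 ms sweep

# Simulate a single noise-free echo arriving 40 samples after transmission.
delay = 40
received = np.zeros(len(chirp) + 200)
received[delay:delay + len(chirp)] += 0.5 * chirp

profile = echo_profile(received, chirp)
peak = int(np.argmax(profile))
print(f"strongest echo at a delay of {peak} samples")
```

In a system like the one described, a sequence of such profiles over time would presumably be what the deep-learning model receives as input. As the mouth moves, the delays and strengths of the echoes shift, and the model would learn to map those shifts to commands.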

This kind of acoustic-sensing technology removes the need for wearable video cameras. Because audio data is much smaller than image or video data, it requires less bandwidth to process and can be relayed to a smartphone via Bluetooth in real time.

“And because the data is processed locally on your smartphone instead of uploaded to the cloud,” said François Guimbretière, professor of information science at Cornell Bowers CIS and a co-author, “privacy-sensitive information never leaves your control.”

In its present form, EchoSpeech could be used to communicate with others via smartphone in places where speech is inconvenient or inappropriate, like a noisy restaurant or quiet library. The current version of the glasses offers ten hours of battery life for acoustic sensing versus 30 minutes with a camera.

The researchers are now exploring commercializing the technology behind EchoSpeech, thanks in part to Ignite: Cornell Research Lab to Market gap funding. In future work, the team is exploring smart-glass applications to track facial, eye, and upper body movements.

“We think glass will be an important personal computing platform to understand human activities in everyday settings,” said Cheng Zhang, assistant professor of information science at Cornell and director of the SciFi Lab.

Researchers build sonar glasses that can read silent speech

Source: Tambay News
